Consider a cluster built on the server virtualization platform "vSphere". The purpose of this cluster is to pool the resources of all the ESXi hosts running within it.

Background

How can we build the cluster?

Of course, you build the cluster through vCenter Server. For more information about vSphere HA and the Cluster object, you can read my last article, "Understanding vSphere HA".

In short, vSphere HA restarts VMs on a surviving host in the cluster if something disastrous happens to an ESXi host, such as a hardware failure or network isolation.

So, a cluster is a vCenter Server object that is separate from vSphere HA and DRS. vSphere HA and DRS are services that run on top of the cluster object and try to maintain resources among the ESXi hosts in it.

Definition and Concepts

DRS is short for Distributed Resource Scheduler. It is the service that runs on top of the cluster of ESXi hosts and keeps an eye on the VMs' need for resources such as

- CPU

- Memory

- Storage IOPS

- Network Bandwidth

DRS is a vCenter Server service that manages the vCenter Server object "Cluster". Each cluster has its own DRS configuration.

Starting with vSphere 7.x, DRS focuses on VM-centric resource utilization and scores VMs as Happy (>80) or Un-Happy (<60). This score reflects how well a VM is getting the resources it wants (listed above) on the ESXi host it currently runs on in the cluster.

So, if a VM does not have a good score, it is marked as not happy on its current ESXi host and needs to be moved to the next available (qualifying) ESXi host, depending on the DRS configuration settings. We discuss these settings below.
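To make the scoring idea concrete, here is a minimal sketch in Python. It only uses the two thresholds quoted above (above 80 is happy, below 60 is unhappy). The function names, the "neutral" band in between, and the sample VM names are my own assumptions for illustration and are not how DRS is implemented internally.

```python
from enum import Enum


class Happiness(Enum):
    HAPPY = "happy"
    NEUTRAL = "neutral"   # assumed label for scores between the two thresholds
    UNHAPPY = "unhappy"


# Thresholds quoted in the article; everything else here is hypothetical.
HAPPY_ABOVE = 80
UNHAPPY_BELOW = 60


def classify_vm(score: float) -> Happiness:
    """Map a 0-100 VM score to a happiness band."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    if score > HAPPY_ABOVE:
        return Happiness.HAPPY
    if score < UNHAPPY_BELOW:
        return Happiness.UNHAPPY
    return Happiness.NEUTRAL


def migration_candidates(scores: dict) -> list:
    """Return the unhappy VMs, lowest score first."""
    unhappy = [name for name, score in scores.items()
               if classify_vm(score) is Happiness.UNHAPPY]
    return sorted(unhappy, key=lambda name: scores[name])


if __name__ == "__main__":
    sample = {"web01": 92, "db01": 45, "ad01": 58}  # made-up VM names and scores
    print(migration_candidates(sample))
```

In this toy model only `db01` and `ad01` would be looked at for a move; whether a move actually happens depends on the DRS settings.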

Requirements

DRS is required whenever we want vSphere to take control of resource management and meet the SLAs of the applications running on top of our virtualization platform. To use it, the following requirements must always be met

- Enterprise Plus License for vSphere (this may change in future, but full DRS functionality needs this license)

- vCenter Server - DRS is a vCenter Server service

- Cluster object must be configured before DRS enablement - because DRS always works with a Cluster

- Shared Datastore - for compute migration using vMotion among the ESXi hosts in a cluster

- vMotion VMkernel Configuration - a must; without this configuration only the Manual DRS level works

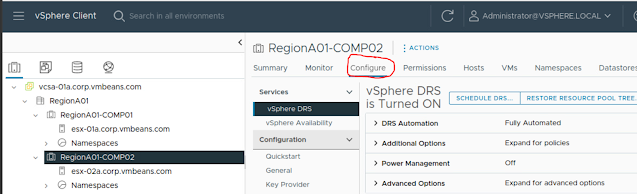

In the picture, DRS is already configured, but you can change the configuration by clicking the Edit option available in the same interface.

Once you click Edit, the same configuration page appears, and you can use it to reconfigure the DRS service on the selected cluster object.

In the Edit DRS page you will see numerous configuration settings, which are described below.

DRS is configurable in 3 different modes / levels

Manual Level

This is the first option offered by DRS. It does not require you to configure vMotion, and it only provides recommendations for workload movement based on the workloads' demand for resources.

Partially Automated

This level includes the "recommendation only" behaviour of the Manual level and adds "Initial Placement": before a workload/VM starts up, DRS decides which ESXi host in the cluster is best suited to run it. This decision capability is what this level adds.

Fully Automated

This is the recommended level, and it needs everything listed in the Requirements section above. It provides the feature sets of the two levels above and also moves workloads automatically whenever that is needed.
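The difference between the three levels can be summed up as a small lookup table. The sketch below is only a model of the descriptions above; the class, the dictionary keys, and the event names are hypothetical and are not vSphere API identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelBehaviour:
    auto_initial_placement: bool  # DRS picks the host at power-on by itself
    auto_migration: bool          # DRS runs vMotion by itself


# Behaviour per level as described in the article (names are illustrative).
LEVELS = {
    "manual": LevelBehaviour(auto_initial_placement=False, auto_migration=False),
    "partially_automated": LevelBehaviour(auto_initial_placement=True, auto_migration=False),
    "fully_automated": LevelBehaviour(auto_initial_placement=True, auto_migration=True),
}


def drs_action(level: str, event: str) -> str:
    """Return 'apply' if DRS acts on its own, 'recommend' if it only suggests."""
    behaviour = LEVELS[level]
    if event == "power_on":
        automatic = behaviour.auto_initial_placement
    elif event == "imbalance":
        automatic = behaviour.auto_migration
    else:
        raise ValueError(f"unknown event: {event}")
    return "apply" if automatic else "recommend"


if __name__ == "__main__":
    for level in LEVELS:
        print(level, drs_action(level, "power_on"), drs_action(level, "imbalance"))
```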

Migration Threshold

This option controls how readily vMotion is triggered when the DRS level is set to Fully Automated. It matters because vMotion always has a cost: migrating a VM from one host to another consumes bandwidth between the source and target ESXi hosts.

It is always recommended to keep this setting in the middle, neither too Conservative nor too Aggressive.

Conservative

At this threshold level, if a VM needs resources, DRS does not respond immediately. Instead it waits longer so that a resource spike can settle, which may delay providing resources when the VM really needs them.

Aggressive

At this threshold level, even a slight spike in a VM's resource utilization triggers a vMotion (VM migration). This can lead to a massive number of vMotion operations, with VMs moving back and forth all the time.

So, moderation is the best policy!
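One way to picture the threshold is as a filter on recommendations. The sketch below assumes, as I understand the vSphere Client, a five-position slider (1 = Conservative, 5 = Aggressive) and recommendations rated from priority 1 (most important) to priority 5 (smallest gain). The class and function names are hypothetical, and the real DRS algorithm is far more involved than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    vm: str
    target_host: str
    priority: int  # assumed scale: 1 = most important, 5 = smallest improvement


def recommendations_to_apply(recs: list, threshold: int) -> list:
    """Keep only the recommendations that the chosen threshold lets through."""
    if threshold not in range(1, 6):
        raise ValueError("threshold must be between 1 (Conservative) and 5 (Aggressive)")
    return [rec for rec in recs if rec.priority <= threshold]


if __name__ == "__main__":
    # Made-up VM and host names.
    pending = [
        Recommendation("db01", "esxi-02", priority=1),
        Recommendation("web01", "esxi-03", priority=3),
        Recommendation("test01", "esxi-02", priority=5),
    ]
    for level in (1, 3, 5):
        moved = [rec.vm for rec in recommendations_to_apply(pending, level)]
        print(f"threshold {level}: {moved}")
```

With the slider in the middle, the important moves still happen while the marginal ones, which mostly just burn vMotion bandwidth, are skipped.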

Predictive DRS

As you know, DRS is by nature a reactive service: it responds only after a VM hits a resource spike. With this option, it can also act proactively.

If you enable this option (which works well with VMware Aria Operations, formerly vRealize Operations), DRS looks at the predictive analysis from VAO/vROps, or at the data provided by vCenter Server, and moves a VM if needed based on time-based resource utilization. For example, a VM running Active Directory services needs more resources at office start-up time, when employees walk into the office and start logging in. So that VM must be given good resources from 0900 to 1100 hrs, or depending on the number of logon requests to AD.
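The Active Directory example can be sketched as a simple look-ahead check. This is only a toy model: the forecast numbers, the MHz unit, and the function name are assumptions for illustration, and they do not represent the actual analytics used by Aria Operations or DRS.

```python
def predicted_shortfalls(forecast_mhz: dict, available_mhz: int) -> list:
    """Return the hours (0-23) where forecast demand exceeds what the host can give."""
    return sorted(hour for hour, demand in forecast_mhz.items()
                  if demand > available_mhz)


if __name__ == "__main__":
    # Hypothetical hourly CPU demand forecast for an AD VM (hour -> MHz).
    ad_forecast = {8: 1200, 9: 3800, 10: 3500, 11: 1500}
    hours = predicted_shortfalls(ad_forecast, available_mhz=3000)
    # A proactive scheduler would act before the first of these hours,
    # instead of waiting for the spike to happen.
    print(hours)
```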

Virtual Machine Automation

If you check this box, you can add "Exceptions" for VMs in the cluster to take those VMs out of the cluster-wide DRS rules and their implications. For example, you can add the vCSA VM so that it is not affected in a cluster that hosts management and compute workloads together.

So no affinity or anti-affinity rule is applied to such VMs when rules are applied to the whole cluster. These VMs are configured at a separate DRS level and are treated as exceptional VMs.

I will come up with a practical demonstration on my YouTube channel. If you haven't subscribed yet, please have a look and subscribe.
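The exception mechanism boils down to "use the VM's own level if it has one, otherwise the cluster's level". A minimal sketch of that lookup follows; the VM names, the level strings, and the function names are hypothetical, and the "disabled" value assumes the per-VM override can switch DRS off for a single VM.

```python
def effective_level(vm_name: str, cluster_default: str, overrides: dict) -> str:
    """Per-VM override wins; otherwise the cluster-wide DRS level applies."""
    return overrides.get(vm_name, cluster_default)


def drs_may_move(vm_name: str, cluster_default: str, overrides: dict) -> bool:
    """Only a fully automated VM is migrated without asking."""
    return effective_level(vm_name, cluster_default, overrides) == "fully_automated"


if __name__ == "__main__":
    cluster_default = "fully_automated"
    overrides = {"vcsa01": "disabled"}  # made-up name for the vCSA VM
    for vm in ("vcsa01", "web01"):
        print(vm, effective_level(vm, cluster_default, overrides),
              drs_may_move(vm, cluster_default, overrides))
```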